When you’re looking at Google Analytics data, you’re sometimes just one click away from an insight that could change your business.

One simple trick can be the difference between spotting something valuable and missing it.

Here are three Google Analytics tricks that will help you quickly get the business insights you need from your data.

1) Weighted analytics

I’ve been working to get our bounce rate down here on The Daily Egg.

So, I fired up Google Analytics and decided to take a look at some individual pages that were showing high bounce rates to see if I could find any similarities.

It seems like it would be easy to decide where to start, but it isn’t. When you’re dealing with large amounts of data, you will usually have outliers, and these outliers can make it impossible to see anything of value.

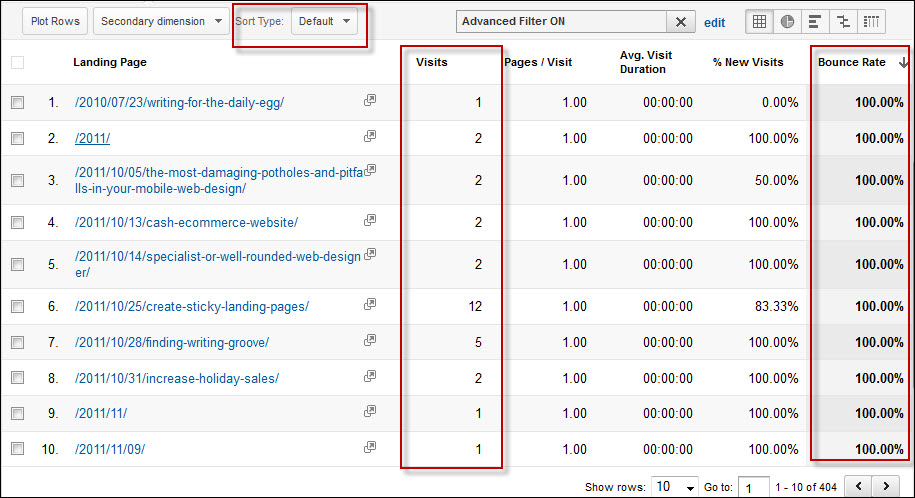

In this case, I have a number of pages with a 100% bounce rate but very few visits. This isn’t doing me any good: it wouldn’t be worth my time to investigate a page with only 1 or 2 visits.


This is the data with the “default” sorting method.

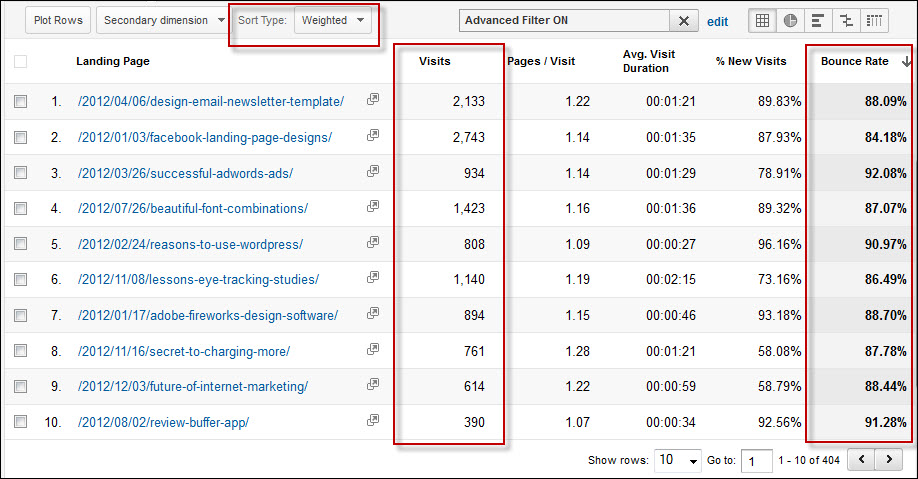

Fortunately, Google Analytics has me covered. By changing the SORT TYPE from DEFAULT to WEIGHTED, I can suddenly see where to start my research.

I won’t go into the magic pixie dust that makes weighted sorting work, but suffice it to say that it takes traffic volume into account, so pages with enough visits to be significant rise to the top.


This is the data with the “weighted” sorting method.
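Google doesn’t document how weighted sort works, so the sketch below swaps in a well-known stand-in technique rather than Google’s algorithm: a Bayesian-style average that pulls each page’s bounce rate toward the site-wide rate, more strongly the fewer visits the page has. A page with 2 visits and a 100% bounce rate ends up near the site average, while a page with thousands of visits keeps roughly its own rate. The `PageStats` type, the sample pages, and the `prior_visits=100` pseudo-count are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PageStats:
    path: str
    visits: int
    bounces: int

    @property
    def bounce_rate(self) -> float:
        return self.bounces / self.visits if self.visits else 0.0


def weighted_bounce_rate(page: PageStats, site_rate: float, prior_visits: int) -> float:
    """Shrink a page's bounce rate toward the site-wide rate.

    Acts as if every page had `prior_visits` extra visits bouncing at the
    site average, so low-traffic pages stay close to `site_rate`.
    """
    return (page.bounces + site_rate * prior_visits) / (page.visits + prior_visits)


def weighted_sort(pages: list[PageStats], prior_visits: int = 100) -> list[PageStats]:
    """Sort pages by shrunken bounce rate, highest first (a stand-in, not GA's method)."""
    total_visits = sum(p.visits for p in pages)
    total_bounces = sum(p.bounces for p in pages)
    site_rate = total_bounces / total_visits if total_visits else 0.0
    return sorted(
        pages,
        key=lambda p: weighted_bounce_rate(p, site_rate, prior_visits),
        reverse=True,
    )


# Hypothetical pages for illustration.
pages = [
    PageStats("/tiny-page", visits=2, bounces=2),           # 100% bounce, 2 visits
    PageStats("/popular-post", visits=5000, bounces=4100),  # 82% bounce
    PageStats("/another-post", visits=3000, bounces=1500),  # 50% bounce
]

default_order = sorted(pages, key=lambda p: p.bounce_rate, reverse=True)
print([p.path for p in default_order])          # ['/tiny-page', '/popular-post', '/another-post']
print([p.path for p in weighted_sort(pages)])   # ['/popular-post', '/tiny-page', '/another-post']
```

The default sort puts the 2-visit page first; the weighted version surfaces the high-traffic page with a genuinely high bounce rate. Raising `prior_visits` makes the sort more conservative about small pages.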

2) Data-over-time intervals

Back in the summer, I activated the dormant Crazy Egg Facebook Page. Nothing too crazy, just posting our articles for our fans and occasionally a status update when I found something interesting to share.

As the end of the year approaches, I would like to see if it makes sense to ramp up our presence on Facebook in 2013.

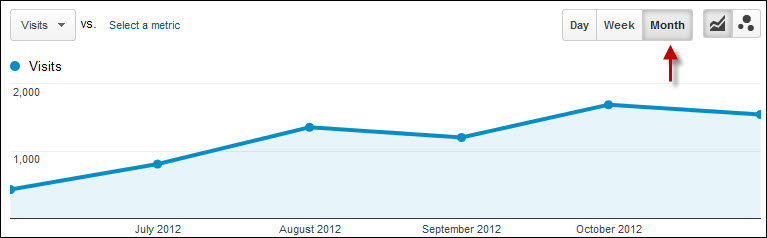

But looking at data over time can be tricky if you don’t select the right intervals.

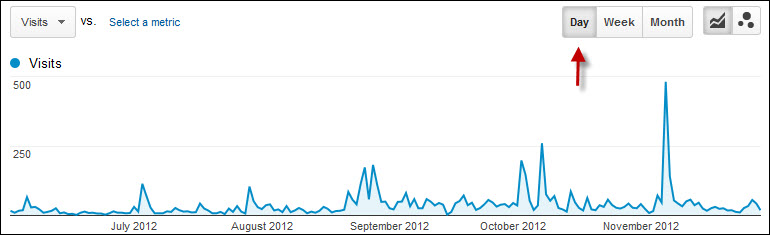

In this case, going back six months and displaying the data by day is basically worthless. This view might be OK if I were trying to pinpoint the exact Facebook status updates that drove the most traffic.

But I just want to know whether posting to Facebook over the last six months has had any effect in aggregate.

Data over time shown daily

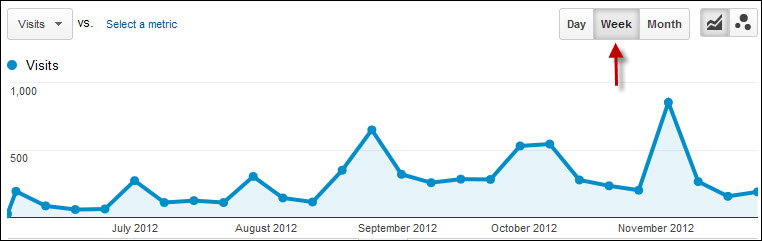

When I view the data by week, I get a much clearer picture. This view would be perfect if I were, for example, trying to determine whether a Facebook contest I ran generated more traffic than usual. But, again, that’s not what I’m looking for today.

Data over time shown weekly.

Viewing the data monthly shows me that our small amount of activity on Facebook has produced more traffic. This view is (as Goldilocks would say) just right to answer this particular question.

Ahh, now that’s better. Data over time shown monthly.

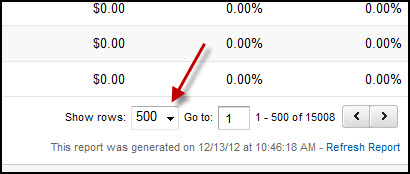
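Changing the interval doesn’t change the underlying data, only how it’s bucketed: daily counts are summed into weeks or months, which smooths out day-to-day spikes (such as the days you post) and exposes the longer trend. Here is a minimal sketch of that roll-up in Python, using made-up daily referral counts; the `rollup` function and the sample numbers are hypothetical, and weeks here follow ISO numbering (Monday start), which may differ from how Google Analytics draws week boundaries.

```python
from __future__ import annotations

from collections import defaultdict
from datetime import date, timedelta


def rollup(daily: dict[date, int], interval: str) -> dict[str, int]:
    """Sum daily visit counts into 'day', 'week' (ISO week) or 'month' buckets."""
    buckets: dict[str, int] = defaultdict(int)
    for day, visits in daily.items():
        if interval == "day":
            key = day.isoformat()
        elif interval == "week":
            iso_year, iso_week, _ = day.isocalendar()
            key = f"{iso_year}-W{iso_week:02d}"
        elif interval == "month":
            key = f"{day.year}-{day.month:02d}"
        else:
            raise ValueError(f"unknown interval: {interval!r}")
        buckets[key] += visits
    # Zero-padded keys sort chronologically as plain strings.
    return dict(sorted(buckets.items()))


# Hypothetical daily Facebook referrals, 2012-07-01 through 2012-11-30:
# a slow upward drift plus a spike every Tuesday (a made-up "post day").
start = date(2012, 7, 1)
daily = {}
for i in range(153):
    day = start + timedelta(days=i)
    daily[day] = 10 + i // 10 + (25 if day.weekday() == 1 else 0)

for interval in ("day", "week", "month"):
    print(f"{interval}: {len(rollup(daily, interval))} data points")
print(rollup(daily, "month"))
```

The daily series has 153 noisy points; the monthly one has five, which is what makes an aggregate trend easy to read at a glance.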

3) Export more than 500 rows in Google Analytics

Sometimes you just need to get out of Google Analytics and into a spreadsheet to get the insight you need.

In my case, I wanted to look at our email subscriber conversion rate by organic keyword. Knowing which keywords convert best helps me make a number of SEO and editorial decisions. When I do analysis like this, I like to get the data into Excel, where I can do some manipulation that can’t be done in Google Analytics.
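Once keyword data is out of Google Analytics, the core calculation is simple: signups divided by visits for each keyword, skipping keywords with too little traffic to mean anything, then sorting best-first. A minimal Python sketch of that spreadsheet step; the column names (`keyword`, `visits`, `email_signups`), the sample rows, and the `min_visits=50` cutoff are hypothetical, and a real export may include extra metadata lines you would need to strip first.

```python
from __future__ import annotations

import csv
import io

# Hypothetical export; column names are assumptions, not GA's exact headers.
EXPORT = """keyword,visits,email_signups
google analytics tricks,1200,36
heatmap tool,800,40
crazy egg,5000,75
what is a bounce rate,3,1
"""


def conversion_by_keyword(csv_text: str, min_visits: int = 50) -> list[tuple[str, int, float]]:
    """Return (keyword, visits, conversion rate) rows sorted best-first.

    Keywords with fewer than `min_visits` visits are dropped, for the same
    reason low-traffic pages mislead a bounce-rate sort.
    """
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        visits = int(row["visits"])
        if visits < min_visits:
            continue
        rate = int(row["email_signups"]) / visits
        rows.append((row["keyword"], visits, rate))
    rows.sort(key=lambda r: r[2], reverse=True)
    return rows


for keyword, visits, rate in conversion_by_keyword(EXPORT):
    print(f"{keyword:<25} {visits:>6} {rate:.1%}")
```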

But Google Analytics limits the number of rows you can export to 500.

In October 2012 alone, we had visits from over 15,000 different organic keywords. If I exported them 500 rows at a time and stitched the files together in a spreadsheet, I would have to export more than 30 times. Ack!

That is, until I learned this easy hack.

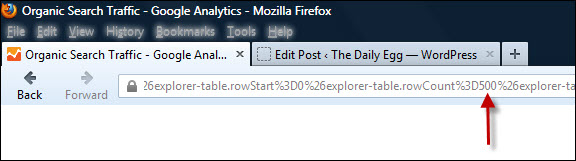

First, select 500 as the number of rows to display in your report.

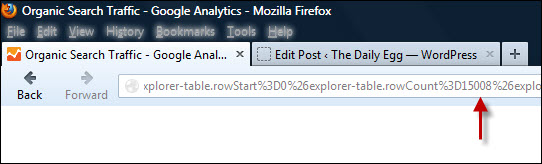

Then, find that 500 in the long, ugly URL string, as pictured.

Change that number to the number of rows you want to export (in my case, 15,008). I believe 20,000 is the upper limit for this hack. Besides, if you go too crazy with the number of rows, you risk crashing your browser on the next step.

After you alter the URL, press the Enter key to load the new URL.

Now you are ready to Export as usual.
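Editing the URL by hand works fine, but if you do it often, the edit is easy to script. A minimal Python sketch, assuming the row count appears in the report URL as `rowCount=500` or its URL-encoded form `rowCount%3D500` (check your own URL; the format isn’t documented and may have changed). The `set_row_count` helper and the sample URL are hypothetical, and the 20,000 cap only mirrors the limit the author believes applies.

```python
import re

# Assumption: the report URL carries "rowCount=<n>" or the encoded "rowCount%3D<n>".
ROW_COUNT = re.compile(r"(rowCount(?:=|%3[Dd]))(\d+)")

# Assumed upper limit (the author's belief above), not a documented value.
MAX_ROWS = 20_000


def set_row_count(report_url: str, rows: int) -> str:
    """Return report_url with its first row-count value replaced by `rows`."""
    if not 1 <= rows <= MAX_ROWS:
        raise ValueError(f"rows must be between 1 and {MAX_ROWS}")
    new_url, replaced = ROW_COUNT.subn(lambda m: m.group(1) + str(rows), report_url, count=1)
    if replaced == 0:
        raise ValueError("no rowCount value found; set the report to 500 rows first")
    return new_url


# Hypothetical URL; the account IDs and report path are placeholders.
url = ("https://www.google.com/analytics/web/#report/trafficsources-organic/"
       "a00000000w00000000p00000000/%3Fexplorer-table.rowCount%3D500/")
print(set_row_count(url, 15_008))
```

Paste the printed URL into the address bar, press Enter, and export as usual.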

So, what do you think of these Google Analytics tricks? Do you know any of your own you could share with us?

Source: http://blog.crazyegg.com/2012/12/14/google-analytics-tricks/

Some are reporting that when you log in to your AdWords account, open the Tools drop-down, and select the Keyword Tool, Google doesn’t take you to the Keyword Tool. Instead, Google takes you to the new Keyword Planner tool.


Author Bio: Barry Schwartz is the CEO of RustyBrick, a New York Web service firm specializing in customized online technology that helps companies decrease costs and increase sales. Barry is also the founder of the Search Engine Roundtable and the News Editor of Search Engine Land. He is well known and respected for his expertise in the search marketing industry. Barry graduated from the City University of New York and lives with his family in the NYC region. You can follow Barry on Twitter at @rustybrick or on Google+.